Matthew Cobb: Why did it take 200 years to find out where babies came from? How we discovered the secret of sex.

Saturday 9th March 2024 • Hebden Bridge Town Hall • 7:30pm • £10

Saturday 17th February 2024 • Hebden Bridge Town Hall • 7:30pm • £10

Saturday 20th January 2024 • Hebden Bridge Town Hall • 7:30pm • £15 • SOLD OUT

Saturday 25th November 2023 • Hebden Bridge Town Hall • 7:30pm

Saturday 28th October 2023 • Hebden Bridge Town Hall • 7:30pm • £10

Saturday 30th September 2023 • Hebden Bridge Town Hall • 7:30pm • £10

Friday 14th July 2023 • Hebden Bridge Town Hall • 8pm • £10/£7

Prof Tim O’Brien: Multi-messenger astrophysics: new ways of exploring the universe
SATURDAY 18 MARCH 2023. How it went – write-up by Roger Gill. The Lit&Sci website says, ‘We know we’ve got it right when there’s…

Rachel McCormack: Chasing the Dram – the world of taste and whisky
SATURDAY 25 FEBRUARY 2023. How it went – by Peter Lord. A near-capacity crowd in the Waterside Room at Hebden Bridge Town Hall were…

Liz John: Writing for The Archers
SATURDAY 21 JANUARY 2023. What is it about this ‘everyday story of country folk’ that makes 5 million listeners tune in daily? Have you ever…

Ian Brand: The Twelve Plants of Christmas
3 DECEMBER 2022. We have many Christmas traditions, many of them plant- or food-based. Botanist Ian Brand takes us on a seasonal tour, encompassing…

Louise Doughty
12 NOVEMBER 2022. ‘I Preferred the Book’: what’s it like to see your novel adapted for film or television? Novelist and now screenwriter Louise Doughty…

Sir Michael Marmot: The Social Determinants of Health and Health Equity
8 OCTOBER 2022. World-renowned epidemiologist Professor Sir Michael Marmot did not mince his words as he presented evidence to show how government policies have…

Kate Lycett: Telling Stories. Painting a Narrative.
Saturday 12th March, 7:30pm at Hebden Bridge Town Hall. It was good to be back, after two years of lockdown, in the Waterfront Hall with…

What is happening to General Practice?: Professor Aneez Esmail
Saturday 12th February, 7:30pm, online via Zoom. Prof Esmail will give a brief overview of how the current problems being experienced in General Practice have…

Richard Morris: Writing, Yorkshire
Richard described his tussles with the publisher over the book’s title, amid concerns that public preconceptions might limit the book’s ‘shelf’ appeal should the wrong impression…

Susan Owens: The Appearance of Ghosts
Susan Owens is a freelance writer, curator and art historian; until a few years ago she was Curator of Paintings at the Victoria and Albert Museum…

Chris Renwick: Liberalism and the British Welfare State; its Past and its Future
Chris Renwick teaches Modern British History at the University of York, and in 2017 he achieved fame beyond the confines of the academic world with…

Tim Birkhead: The Most Perfect Thing; the Inside (and Outside) of a Bird’s Egg
Tim Birkhead launched the 2018/19 season in great style to a hall filled to capacity. Tim delivered his information-packed talk in jargon-free plain…

Tony Freemont: Biomarkers
Biomarkers: why medicine is about to undergo the biggest single change ever. Professor Tony Freemont, Proctor Professor of Pathology, University of Manchester and Director of…

Tom Mills: The BBC: Public Servant or State Broadcaster?
Our warm shared memories of favourite children’s television colour our attitude to the BBC, the well-meaning Auntie of the nation, provider of our popular…

Judith Weir: A Composer’s Life
Judith Weir is one of the country’s leading composers and was appointed to the ancient office of Master of the Queen’s Music in 2014. Part…

Jeff Forshaw: Before the Big Bang
6th October 2017. Jeff Forshaw is a professor of theoretical physics at the University of Manchester, specialising in the phenomenology of particle physics. He is…

Philosophy, Democracy and the Demagogue
Or how Plato can help us deal with Donald Trump: Professor Angie Hobbs, 24th March 2017. Angie Hobbs is the first academic to be appointed…

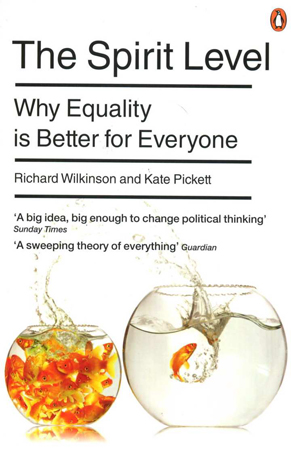

Inequality and Social Anxiety
Kate Pickett and Richard Wilkinson, 5th February 2017. Kate Pickett and Richard Wilkinson are Professors of Epidemiology who have collaborated for…

Dr Nicholas Cullinan: Picasso’s Portraits
12th November 2016. With an artist as prolific as Picasso there are always new ways to approach his work, perhaps as many ways of looking…

Sir Mark Elder: Are conductors really necessary?
Sir Mark Elder, Music Director of the Hallé Orchestra, 1st October 2016. One of the oldest cultural societies in Hebden Bridge is undergoing something of…